After recently working with the HP Virtual Connect Flex-10s in an HP C7000 blade chassis, I decided to blog about a few of the things I have learnt.

What is a Virtual Connect Flex10?

OK, I’m going to cheat on this bit. Below I have included the HP video on VC Flex-10s; it does a very good job of explaining exactly what a VC Flex-10 is.

So what this means for us is that each Flex-10 connection on our blade server can offer up to 4 network connections (FlexNICs), which together share that port's 10Gb and can each be fine-tuned between 100Mb and 10Gb. With two Flex-10s we can ensure our networking is fully redundant, and we can uplink our Virtual Connect Flex-10s to our core network infrastructure with as few as two 10Gb connections.

Given the number of NICs required in an average ESX build, this makes Flex-10s very attractive when deploying HP blade chassis.

HP Virtual Connect Flex-10 10Gb Ethernet Module

HP 1/10Gb-F Virtual Connect Ethernet Module

Firmware

The first thing you will need to do when using the Virtual Connects is upgrade the firmware. After a bit of searching on the internet, I found numerous issues reported with older firmware.

Firmware for the Virtual Connect Flex 10’s can be found here >> http://h20000.www2.hp.com/bizsupport/TechSupport/DriverDownload.jsp?prodNameId=3201264&lang=en&cc=us&taskId=135&prodTypeId=3709945&prodSeriesId=3201263

I found the descriptions of the downloads very misleading and in the end found out I had to use the FC firmware even though I had an Ethernet module!

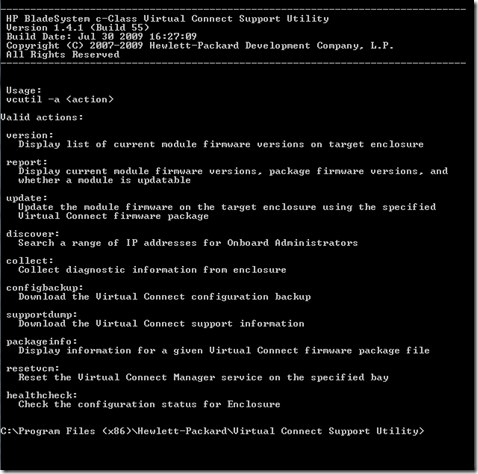

I found the easiest and most reliable way of doing this was to use the HP BladeSystem c-Class Virtual Connect Support Utility, which is available from the HP download site.

Once you have downloaded and installed the utility you will be able to use it to connect to your blade chassis and perform several tasks on your virtual connects.

If you have a current configuration on your Virtual Connects, make sure you back it up first in case you run into any issues.

Once you have backed up the configuration, you can apply the firmware update.

I also found the Bay command useful when I only had to update a single replacement module after an initial failure.

My Scenario

In my scenario I was working with an HP C7000 blade chassis, numerous HP BL460c G6 server blades, two HP Virtual Connect Flex-10 10Gb Ethernet Modules and two non-stacking Cisco switches. I was also working with iSCSI-based storage. This is by no means a hardware recommendation, simply what I had to work with in my scenario.

The HP BL460c G6 server blade comes with two LOM (LAN on Motherboard) connections. As each LOM can be split into four FlexNICs, we are able to provision 8 FlexNICs in total, spread across the two Virtual Connects located in interconnect bays 1 and 2.

Virtual Connect Configuration

In my scenario I used a single CX4 connection from each Virtual Connect to uplink to each of my core switches. As these two switches are not stacked together, only uplinked to each other, the Virtual Connects would be configured in an active/passive configuration for failover.

To accomplish this I created a single Virtual Connect domain with both Virtual Connects as members. A single Shared Uplink Set was then configured containing both CX4 uplinks, and the relevant VLANs in my network were created in the Virtual Connect Networks configuration. A server profile was then created for each ESX host with 8 FlexNICs. As I like to tag traffic on the ESX side, I configured the FlexNICs to allow multiple networks and selected the relevant VLANs for each. The only exception was the vMotion FlexNICs: these were configured with no uplink and assigned directly to the vMotion network. Because all the ESX hosts were located in the same blade chassis, all vMotion traffic could travel across the Virtual Connects without leaving the chassis.
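To illustrate the active/passive uplink behaviour described above, here is a minimal sketch in Python. It assumes a deliberately simplified model in which the first uplink in the list with link up carries all traffic and the other stays in standby; the `Uplink` class and the uplink names are hypothetical, and the real Virtual Connect selection logic is not modelled.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Uplink:
    name: str       # hypothetical label for a CX4 uplink
    link_up: bool   # physical link state of the uplink port


def active_uplink(uplinks: List[Uplink]) -> Optional[Uplink]:
    """Pick the uplink that forwards traffic in a simplified active/passive model.

    Assumption: the first uplink in the list with link up is active and the rest
    stay in standby. The real Virtual Connect selection logic is not modelled.
    """
    for uplink in uplinks:
        if uplink.link_up:
            return uplink
    return None  # no usable uplink: networks on this uplink set lose connectivity


if __name__ == "__main__":
    shared_uplink_set = [
        Uplink("Bay 1 CX4 -> core switch A", link_up=True),
        Uplink("Bay 2 CX4 -> core switch B", link_up=True),
    ]
    print(active_uplink(shared_uplink_set).name)  # Bay 1 CX4 -> core switch A
    shared_uplink_set[0].link_up = False          # simulate losing the bay 1 uplink
    print(active_uplink(shared_uplink_set).name)  # Bay 2 CX4 -> core switch B
```

The point of the model is that only one uplink forwards at a time, so a single uplink's bandwidth is all that is available until an active/active design is possible.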

One point to consider when designing your Virtual Connect environment is that a VLAN may only be connected to one FlexNIC per LOM. This can cause difficulty if your Service Console is on your production network, as you would not be able to assign your production VLAN to both your Service Console port group and your Virtual Machine Network port group. To avoid this, move your Service Console onto a management VLAN and route between this VLAN and your production VLAN as necessary.
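The one-VLAN-per-FlexNIC-per-LOM rule is easy to check before building a server profile. The sketch below is a hypothetical pre-flight check, not a Virtual Connect feature; the VLAN IDs in `PROFILE` are illustrative and not taken from my build.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple

# Hypothetical FlexNIC -> VLAN assignments for one LOM. VLAN IDs are
# illustrative only and are not taken from the original build.
PROFILE: Dict[str, Set[int]] = {
    "LOM:1-a": {2},        # Service Console on a management VLAN
    "LOM:1-b": {3},        # iSCSI VLAN
    "LOM:1-c": set(),      # vMotion: internal network with no uplink VLAN
    "LOM:1-d": {4, 5, 6},  # virtual machine VLANs
}


def vlan_conflicts(profile: Dict[str, Set[int]]) -> Dict[Tuple[str, int], List[str]]:
    """Return every (LOM, VLAN) pair that is mapped to more than one FlexNIC."""
    seen: Dict[Tuple[str, int], List[str]] = defaultdict(list)
    for flexnic, vlans in profile.items():
        lom = flexnic.split("-")[0]  # "LOM:1-a" -> "LOM:1"
        for vlan in sorted(vlans):
            seen[(lom, vlan)].append(flexnic)
    return {key: nics for key, nics in seen.items() if len(nics) > 1}


if __name__ == "__main__":
    print(vlan_conflicts(PROFILE))  # {}
    # Service Console moved onto production VLAN 4, which the VM FlexNIC already carries:
    bad = {**PROFILE, "LOM:1-a": {4}}
    print(vlan_conflicts(bad))  # {('LOM:1', 4): ['LOM:1-a', 'LOM:1-d']}
```

The second call shows exactly the Service Console problem described above; moving the Service Console to its own management VLAN makes the conflict disappear.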

FlexNICs are assigned in the following order:

| Order | FlexNIC |
|---|---|
| 1 | LOM:1-a |
| 2 | LOM:2-a |
| 3 | LOM:1-b |
| 4 | LOM:2-b |
| 5 | LOM:1-c |
| 6 | LOM:2-c |
| 7 | LOM:1-d |
| 8 | LOM:2-d |

All the FlexNICs configured from LOM:1 connect through the Virtual Connect in bay 1, and all the FlexNICs configured from LOM:2 connect through bay 2. This means that in a redundant configuration every other FlexNIC can act as the redundant adapter: each FlexNIC pairs with the same letter on the other LOM.
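The assignment order and the bay mapping above can be sketched in a few lines of Python. This assumes the BL460c G6 layout described in this post (LOM:1 through interconnect bay 1, LOM:2 through bay 2); the function names are hypothetical helpers, not part of any HP tool.

```python
from typing import List, Tuple


def flexnic_order(loms: Tuple[int, ...] = (1, 2), letters: str = "abcd") -> List[str]:
    """FlexNICs in profile assignment order: LOM:1-a, LOM:2-a, LOM:1-b, ..."""
    return [f"LOM:{lom}-{letter}" for letter in letters for lom in loms]


def interconnect_bay(flexnic: str) -> int:
    """Assumes LOM:1 exits via interconnect bay 1 and LOM:2 via bay 2."""
    return int(flexnic.split(":")[1].split("-")[0])


def redundant_pairs(order: List[str]) -> List[Tuple[str, str]]:
    """Pair each FlexNIC with the next one: the same letter on the other LOM/bay."""
    return list(zip(order[0::2], order[1::2]))


if __name__ == "__main__":
    for primary, partner in redundant_pairs(flexnic_order()):
        print(f"{primary} (bay {interconnect_bay(primary)}) <-> "
              f"{partner} (bay {interconnect_bay(partner)})")
```

Every pair spans both bays, which is why taking consecutive FlexNICs as a team gives you redundancy across both Virtual Connects.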

ESX Configuration

Conveniently, the FlexNIC order above maps directly onto the ESX vmnics as follows:

| FlexNIC | ESX vmnic |
|---|---|
| LOM:1-a | vmnic0 |
| LOM:2-a | vmnic1 |
| LOM:1-b | vmnic2 |
| LOM:2-b | vmnic3 |
| LOM:1-c | vmnic4 |
| LOM:2-c | vmnic5 |
| LOM:1-d | vmnic6 |
| LOM:2-d | vmnic7 |

This meant I could easily split the FlexNICs across four virtual switches as follows:

vSwitch0 – Service Console Network – vmnic0, vmnic1 – Management VLAN

vSwitch1 – Virtual Machine Networks – vmnic6, vmnic7 – All LAN Traffic VLANs

vSwitch2 – vMotion Network – vmnic4, vmnic5 – vMotion VLAN

vSwitch3 – iSCSI Network – vmnic2, vmnic3 – iSCSI VLAN
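Because even-numbered vmnics come from LOM:1 and odd-numbered vmnics from LOM:2, you can quickly check that every vSwitch has an uplink through each interconnect bay. The sketch below is a hypothetical check based on the mapping table above (it only covers vmnic0 to vmnic7); it is not an ESX command.

```python
from typing import Dict, List

# vSwitch layout from the list above.
VSWITCHES: Dict[str, List[str]] = {
    "vSwitch0": ["vmnic0", "vmnic1"],  # Service Console
    "vSwitch1": ["vmnic6", "vmnic7"],  # virtual machine networks
    "vSwitch2": ["vmnic4", "vmnic5"],  # vMotion
    "vSwitch3": ["vmnic2", "vmnic3"],  # iSCSI
}


def vmnic_to_flexnic(vmnic: str) -> str:
    """Map vmnicN (N = 0..7) to its FlexNIC: even N -> LOM:1, odd N -> LOM:2."""
    n = int(vmnic[len("vmnic"):])
    return f"LOM:{n % 2 + 1}-{'abcd'[n // 2]}"


def single_bay_vswitches(vswitches: Dict[str, List[str]]) -> List[str]:
    """Return vSwitches whose uplinks do not span both interconnect bays."""
    flagged = []
    for name, uplinks in vswitches.items():
        # In this build the LOM number doubles as the interconnect bay.
        bays = {vmnic_to_flexnic(v)[len("LOM:")] for v in uplinks}
        if len(bays) < 2:
            flagged.append(name)
    return flagged


if __name__ == "__main__":
    print(vmnic_to_flexnic("vmnic5"))       # LOM:2-c
    print(single_bay_vswitches(VSWITCHES))  # []
```

A vSwitch accidentally given the same vmnic twice (or two vmnics from the same LOM) would be flagged, because all of its traffic would depend on a single Virtual Connect.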

The real benefit of the Flex-10 is the ability to customise the bandwidth assigned to each FlexNIC. I followed the Virtual Connect with vSphere white paper for guidelines on bandwidth, and the allocations were set as follows through the Virtual Connect Manager:

Service Console – 500 Mb/s

Virtual Machine Networks – 3 Gb/s

vMotion – 2.5 Gb/s

iSCSI – 4 Gb/s
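Each LOM carries one FlexNIC for each of these four networks, so the four allocations share a single 10Gb port. The sketch below checks that arithmetic (500 + 3,000 + 2,500 + 4,000 Mb/s = 10,000 Mb/s). The 100Mb step size is an assumption on my part, and `remaining_bandwidth` is a hypothetical helper rather than anything in Virtual Connect Manager.

```python
from typing import Dict

PORT_CAPACITY_MBPS = 10_000  # one 10Gb Flex-10 port per LOM
MIN_MBPS = 100               # lower bound quoted earlier in this post
STEP_MBPS = 100              # assumption: bandwidth is set in 100Mb steps

# Allocations from the list above, in Mb/s. Each LOM carries one FlexNIC for
# each of these networks, so all four share the same 10Gb port.
ALLOCATIONS_MBPS: Dict[str, int] = {
    "Service Console": 500,
    "Virtual Machine Networks": 3_000,
    "vMotion": 2_500,
    "iSCSI": 4_000,
}


def remaining_bandwidth(allocations: Dict[str, int],
                        capacity: int = PORT_CAPACITY_MBPS) -> int:
    """Validate one port's FlexNIC allocations and return the unallocated Mb/s."""
    if len(allocations) > 4:
        raise ValueError("a Flex-10 port offers at most 4 FlexNICs")
    for name, mbps in allocations.items():
        if not MIN_MBPS <= mbps <= capacity or mbps % STEP_MBPS:
            raise ValueError(f"{name}: {mbps} Mb/s is outside the allowed range/steps")
    total = sum(allocations.values())
    if total > capacity:
        raise ValueError(f"allocations total {total} Mb/s, port has {capacity} Mb/s")
    return capacity - total


if __name__ == "__main__":
    print(remaining_bandwidth(ALLOCATIONS_MBPS))  # 0: the full 10Gb is allocated
```

With these figures there is no headroom left, so raising one allocation (say iSCSI to 4.5 Gb/s) means taking bandwidth from another FlexNIC on the same port.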

The final point is to make sure you are running the very latest driver for the FlexNICs within ESX, as earlier versions of the driver had issues reporting and acting on uplink failures.

http://downloads.vmware.com/d/info/datacenter_downloads/vmware_vsphere_4/4#drivers_tools

Cisco Switch Configuration

As per the Virtual Connect and Cisco white paper, the switch ports were configured as follows:

interface TenGigabitEthernet0/1

description "VC1 Uplink 1, Po1"

switchport trunk encapsulation dot1q

switchport trunk allowed vlan 2,3,4,5,6,7

switchport mode trunk

spanning-tree portfast trunk

PortFast is configured to allow quick failover between the Virtual Connects in the event of a failure.
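Since both core switches need the same trunk settings, it can help to generate the stanza rather than type it twice. This is a minimal Python sketch that emits only the IOS commands shown above; the helper name and the second switch's description are hypothetical, and the output should be reviewed before pasting it into a switch.

```python
from typing import Iterable


def trunk_port_config(interface: str, description: str, vlans: Iterable[int]) -> str:
    """Render an IOS trunk-port stanza with the same commands as the example above."""
    allowed = ",".join(str(v) for v in sorted(set(vlans)))
    return "\n".join([
        f"interface {interface}",
        f' description "{description}"',
        " switchport trunk encapsulation dot1q",
        f" switchport trunk allowed vlan {allowed}",
        " switchport mode trunk",
        " spanning-tree portfast trunk",  # quick failover between Virtual Connects
    ])


if __name__ == "__main__":
    # The second switch's description is hypothetical.
    for iface, desc in [("TenGigabitEthernet0/1", "VC1 Uplink 1, Po1"),
                        ("TenGigabitEthernet0/1", "VC2 Uplink 1")]:
        print(trunk_port_config(iface, desc, range(2, 8)))
        print("!")
```

Generating both stanzas from one VLAN list keeps the allowed VLANs identical on each switch, which matters in an active/passive design because either uplink may end up carrying every VLAN.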

Diagram

I have put together the following diagram to help illustrate the configuration; unfortunately my Visio skills aren’t quite up to those of Hany Michaels over at www.hypervizor.com!

Download Diagram >> https://virtualisedreality.files.wordpress.com/2010/03/flex10-virtualconnect-vspherediagram.pdf

Improvements

Extra redundancy could, of course, have been provided by installing an additional mezzanine card in each blade; this would also have required two additional Virtual Connect modules.

If stacking switches had been used, an active/active configuration using LACP/EtherChannel trunks could have been configured.

Resources

| Resource | Link |
|---|---|
| Frank Denneman’s fantastic blog – Flex10 Lessons Learned | Go |
| Again, Frank Denneman’s fantastic blog – Flex10 Lessons Learned | Go |
| HP Virtual Connect Flex-10 and VMware vSphere 4.0 | Go |
| HP Virtual Connect and Cisco Administration | Go |
| Kenneth van Ditmarsch has a large amount of Flex-10 information which is well worth looking at | Go |

Good article. Can I point to it on the Blade Connect Community?

Of course you can, much appreciated.

Great, detailed post, many thanks. Quick question: how long did this work take you – not the blog post, but from getting your hands on the kit to producing a working system?

Will send you an email, Steve.

How would you use this in a vSphere DVS or Cisco Nexus 1000v type scenario?

I would have to think about it in some more detail, but I don’t see it changing much; you would just configure it similarly at the DVS uplink level for each host. I can’t really comment on the Nexus 1000v as I have had no hands-on time with it yet, but I have heard of some issues using the Nexus 1000v with the Flex-10 VC.

Hello,

Are the vmnics failover only? So in my port group policy, do I specify a load balancing policy of ‘Failover Only’, as opposed to the way you do it in the non-Virtual Connect world, where you use either originating port ID or IP hash?

What I am trying to ask is: is only one of the VC modules active at any one time, or could you create a load-balanced team using LOM 1a and LOM 2a, for example?

You are correct that in this design only one VC uplink is active at any one time, and the other VC sends all its traffic across to it. I personally didn’t configure the virtual switching that way, though; I believe I used load balancing based on originating port ID. You shouldn’t use IP hash-based load balancing, as the VC doesn’t support LACP/802.3ad.

I’ve also been working heavily with HP Flex-10 and ESX.

I’ve done a blog post on a Flex-10 design for ESX to throw some more information into the mix.

Great blog post Julian, thanks for sharing